Probability literacy helps citizens judge claims, weigh risks, and spot nonsense fast. It turns numbers on dashboards into decisions you can defend. Elections, public health, finance, even weather all speak the language of uncertainty. Learn the basics and you stop arguing about certainties that do not exist. You start asking better questions and you act with more confidence.

Why probability literacy matters for elections and daily decisions

Headlines often frame outcomes as yes or no. Real life works on chances. A candidate with a 70 percent chance can still lose, just as a forecast with a 20 percent chance can still hit. Understanding this saves you from calling normal variance a scandal. It also reduces overreactions after a single poll or a single bad day in the markets. With probability literacy you read margins and ranges, not only point estimates. You balance costs and benefits under uncertainty, which is how responsible policy and household planning should work. Good citizens evaluate evidence, update beliefs, and avoid conspiracy thinking when unlikely events happen.

The core toolkit: percentages, odds, and risk

Percentages express how often an outcome would occur across a large number of trials. Odds describe the ratio of the chance of success to the chance of failure. Convert with a simple rule: odds equal p divided by (1 minus p). A 60 percent chance converts to 0.6 divided by 0.4, which gives odds of 1.5 to 1. Ranges matter more than a single number, because the same probability produces different results each time an event repeats. Risk equals probability multiplied by impact. A small chance with a huge impact deserves attention, while a high chance with a minor impact might be tolerable. This lens keeps priorities straight.
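The conversion rule and the risk formula fit in a few lines of Python. This is a minimal sketch; the function names and the two example events (and their impacts) are hypothetical, chosen only to show that a rare but severe event can outweigh a likely but minor one.

```python
def prob_to_odds(p: float) -> float:
    """Odds in favour: p / (1 - p). Defined for 0 <= p < 1."""
    if not 0 <= p < 1:
        raise ValueError("p must be in the range [0, 1)")
    return p / (1 - p)


def odds_to_prob(odds: float) -> float:
    """Inverse conversion: p = odds / (1 + odds)."""
    if odds < 0:
        raise ValueError("odds cannot be negative")
    return odds / (1 + odds)


def risk(probability: float, impact: float) -> float:
    """Risk as probability multiplied by impact (an expected loss)."""
    return probability * impact


print(round(prob_to_odds(0.6), 2))  # 1.5, i.e. odds of 1.5 to 1

# Hypothetical events, impact measured in the same units (e.g. dollars of damage).
rare_but_severe = risk(0.01, 1_000_000)
likely_but_minor = risk(0.90, 1_000)
print(rare_but_severe > likely_but_minor)  # True
```

The rounding in the first print is deliberate: 0.6 and 0.4 are not exact in binary floating point, so the raw quotient is a hair below 1.5.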

Margins of error and confidence intervals

Polls estimate; they do not certify. A margin of error describes sampling noise only, not total uncertainty, and it is usually stated at a 95 percent confidence level. If a poll says 48 percent with a margin of error of 3 points, the plausible range runs roughly from 45 to 51 percent, assuming no systematic bias. Confidence intervals extend this idea to any estimate, such as turnout or vote share. When two candidates' intervals overlap substantially, the poll cannot reliably separate them. Non-sampling errors, such as unrepresentative samples or poorly worded questions, can be larger than the margin, so smart readers check method notes, response rates, and weighting. One poll is weak evidence. A well-built polling average reduces random swings and gives a cleaner signal.
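The textbook margin of error for a single proportion can be computed directly. This sketch assumes a simple random sample of 1,000 respondents and a 95 percent level (z = 1.96); real polls use weighting and complex designs, so their published margins can be larger than this formula gives.

```python
import math

Z_95 = 1.96  # normal critical value for a 95 percent confidence level


def margin_of_error(p: float, n: int, z: float = Z_95) -> float:
    """Sampling margin of error for a proportion p from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


def interval(p: float, n: int, z: float = Z_95) -> tuple[float, float]:
    """Confidence interval p +/- margin of error."""
    moe = margin_of_error(p, n, z)
    return p - moe, p + moe


low, high = interval(0.48, 1000)
print(f"48% +/- {margin_of_error(0.48, 1000):.1%}: {low:.1%} to {high:.1%}")
# 48% +/- 3.1%: 44.9% to 51.1%

# Quadrupling the sample only halves the margin, because n sits under a square root.
print(f"n=4000: +/- {margin_of_error(0.48, 4000):.1%}")  # n=4000: +/- 1.5%
```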

Bayes in plain language: how to update beliefs

Start with a prior belief, then adjust with new data. If early evidence suggests a candidate is competitive in a district that usually leans the other way, your prior should still anchor you. New polls should shift your view according to how credible they are and how strongly they point one way. Big shifts require strong, repeated evidence. Small, noisy signals should move you less. This is Bayesian updating in simple terms. It prevents both stubbornness and whiplash. You give new facts a fair hearing without forgetting what you already know.
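Bayes' rule has a compact "odds form" that matches this description: posterior odds equal prior odds times a likelihood ratio, which measures how much more likely the evidence is if the candidate is competitive than if not. The 20 percent prior and the likelihood ratios below are hypothetical, and treating the three polls as independent is an assumption.

```python
def update(prior: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds x likelihood ratio.

    likelihood_ratio = P(evidence | competitive) / P(evidence | not competitive).
    A ratio near 1 is a weak, noisy signal; a large ratio is strong evidence.
    """
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


prior = 0.20  # hypothetical: the district usually leans the other way

# One noisy poll barely moves the belief.
print(round(update(prior, 1.5), 2))  # 0.27

# Three independent, stronger polls move it a lot.
belief = prior
for likelihood_ratio in (2.0, 2.0, 2.0):
    belief = update(belief, likelihood_ratio)
print(round(belief, 2))  # 0.67
```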

Randomness, fairness, and verifiability

Random processes power polling samples, audit draws, and risk tests. Citizens should know what makes randomness credible. Seeding, hashing, and public verification let anyone check that results were not cherry-picked. Consumer platforms have helped popularize this idea through "provably fair" systems. Public examples such as BC Game Spain publish the ingredients of their randomness so users can verify outcomes after the fact. The civic lesson is practical: if a lottery, audit, or recount uses random selection, the recipe should be public and independently checkable. Trust grows when verification is possible.
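The commit-and-reveal pattern behind such schemes can be sketched in Python. This is a generic illustration, not the procedure of BC Game or of any election office: the precinct names, the public seed (imagined here as dice rolled at a public meeting), and the HMAC-based ranking are assumptions chosen for clarity. The organizer publishes a SHA-256 hash of a secret seed before the draw and reveals the seed afterward, so anyone can recompute both the hash and the selection.

```python
import hashlib
import hmac
import secrets


def commitment(secret_seed: bytes) -> str:
    """SHA-256 fingerprint published BEFORE the draw; it locks in the secret seed."""
    return hashlib.sha256(secret_seed).hexdigest()


def draw(secret_seed: bytes, public_seed: str, items: list[str], k: int) -> list[str]:
    """Pick k items by ranking each one on an HMAC-SHA256 score.

    The score depends on both seeds. The secret seed is fixed by the published
    commitment before the public seed is known, so the organizer cannot
    choose the outcome in advance.
    """
    def score(item: str) -> bytes:
        message = f"{public_seed}:{item}".encode()
        return hmac.new(secret_seed, message, hashlib.sha256).digest()

    return sorted(items, key=score)[:k]


def verify(revealed_seed: bytes, published_commitment: str, public_seed: str,
           items: list[str], k: int, claimed: list[str]) -> bool:
    """Anyone can rerun this check once the secret seed is revealed."""
    return (hmac.compare_digest(commitment(revealed_seed), published_commitment)
            and draw(revealed_seed, public_seed, items, k) == claimed)


# Usage with hypothetical data: audit 5 of 200 precincts.
precincts = [f"P{n:03d}" for n in range(1, 201)]
secret_seed = secrets.token_bytes(32)
published = commitment(secret_seed)   # 1. publish this before the draw
public_seed = "3-6-1-4-2-5"           # 2. e.g. dice rolled in public afterwards
selected = draw(secret_seed, public_seed, precincts, 5)
print(selected)
print(verify(secret_seed, published, public_seed, precincts, 5, selected))  # True
```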

Simulation and why one number is not enough

Monte Carlo simulation runs a model thousands of times to show a distribution of outcomes. You might see a candidate win in 7 out of every 10 runs, which implies a 30 percent chance of losing. Across many similar situations, an outcome with that probability happens about three times in ten, so a loss would be no surprise. Seeing the full distribution helps you plan for the tails. Campaigns allocate resources with that in mind. Households can do the same for savings, insurance, and large purchases. A single forecast point hides risk. A distribution makes risk visible.
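A toy Monte Carlo forecast shows how a single polling average becomes a distribution. The inputs are assumptions for illustration: a two-candidate race, a 51.5 percent polling average, total error that is normally distributed with a 3-point standard deviation, and victory above 50 percent. Under those assumptions the theoretical win probability is about 69 percent, and the simulated rate should land close to that.

```python
import random
import statistics


def simulate(mean_share: float, error_sd: float, runs: int = 10_000, seed: int = 2024):
    """Monte Carlo: draw the candidate's final vote share many times.

    Assumes a two-candidate race, normally distributed total error, and that
    more than 50 percent of the vote wins.
    """
    rng = random.Random(seed)  # a fixed, published seed makes the run reproducible
    shares = [rng.gauss(mean_share, error_sd) for _ in range(runs)]
    win_probability = sum(share > 50 for share in shares) / runs
    cuts = statistics.quantiles(shares, n=20)  # 19 cut points at 5%, 10%, ..., 95%
    return win_probability, cuts[0], cuts[-1]


win, p5, p95 = simulate(mean_share=51.5, error_sd=3.0)
print(f"wins in {win:.0%} of runs; 90% of runs fall between {p5:.1f} and {p95:.1f} percent")
```

The 5th-to-95th percentile band is the part a single point forecast hides: under these assumptions it stretches from a clear loss to a comfortable win.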

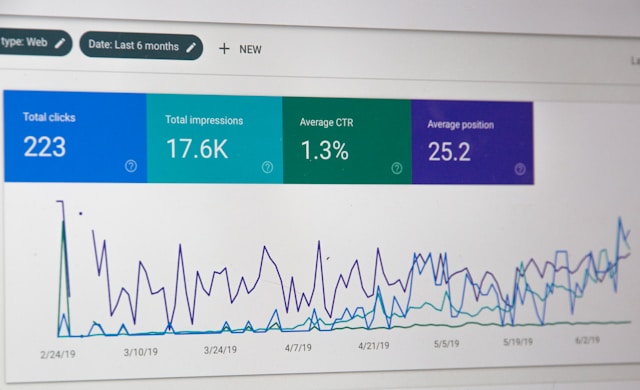

Reading charts and dashboards without getting fooled

Check axes and baselines. A bar chart whose axis starts at 90 instead of 0 exaggerates small changes. Look for the sample size behind any figure that reports averages, because sparse bins with only a few observations produce misleading spikes. On maps, area is not population, so normalize by voters or households. When you see a big swing, ask whether the methodology changed; if it did, the swing may not be real. Legends, units, and time windows must match the claim in the caption. If they do not, treat the claim as unproven.
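A quick simulation shows why sparse bins spike. In this hypothetical survey every bin has the same true "yes" rate of 40 percent, so any difference between bins is pure sampling noise; the bin sizes and the seed are arbitrary choices for illustration.

```python
import random


def bin_rates(true_rate: float, bin_size: int, n_bins: int, rng: random.Random) -> list[float]:
    """Observed 'yes' share in each bin; every bin has the SAME true rate."""
    return [
        sum(rng.random() < true_rate for _ in range(bin_size)) / bin_size
        for _ in range(n_bins)
    ]


rng = random.Random(7)
for size in (5, 50, 500):
    rates = bin_rates(0.40, size, n_bins=20, rng=rng)
    print(f"{size:>3} respondents per bin: {min(rates):.0%} to {max(rates):.0%}")
```

With 5 respondents per bin, the observed rates swing across a wide range; with 500 they cluster near 40 percent. A chart that mixes both kinds of bin will show "spikes" that are only small samples.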

Correlation, causation, and common traps

Two variables can move together without one causing the other, often because a third factor drives both. Elections create many moving parts at once, so spurious links are easy to find. Use controlled comparisons: match precincts or voters on key attributes before comparing outcomes. Beware survivorship bias, which ignores places that dropped out of the data. Beware base rate neglect, which forgets how common a group or outcome was before the new evidence arrived. These traps inflate confidence while adding no real knowledge.
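A simulated example shows how a hidden third factor creates a correlation, and how comparing like with like removes it. The model is entirely hypothetical: being urban raises both the mail-voting rate and one party's vote share, while mail voting itself has no effect on vote share. Across all precincts the correlation comes out strong (around +0.7 in expectation), yet within urban or within rural precincts it should sit near zero.

```python
import math
import random
import statistics


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical precincts: being urban raises BOTH mail voting and one party's
# vote share. Mail voting itself has no effect on vote share in this model.
rng = random.Random(11)
precincts = []
for _ in range(2000):
    urban = rng.random() < 0.5
    mail_rate = 30 + 20 * urban + rng.gauss(0, 5)
    vote_share = 40 + 15 * urban + rng.gauss(0, 5)
    precincts.append((urban, mail_rate, vote_share))


def corr_among(rows: list[tuple[bool, float, float]]) -> float:
    """Correlation between mail rate and vote share within a set of precincts."""
    return pearson([r[1] for r in rows], [r[2] for r in rows])


print(f"all precincts: {corr_among(precincts):+.2f}")
print(f"urban only:    {corr_among([r for r in precincts if r[0]]):+.2f}")
print(f"rural only:    {corr_among([r for r in precincts if not r[0]]):+.2f}")
```

Splitting by the confounder is the simplest form of matching; real analyses match on several attributes at once.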

Practical numeracy for everyday choices

Translate probabilities into frequencies. A 2 percent risk equals 2 in 100. People feel frequencies more clearly than decimals. Compare options by expected value, not by slogans. If a policy reduces a small risk across millions of people, the total benefit can be large. If a choice raises a large risk for a small group, weigh that distribution honestly. Communicate uncertainty with plain language and ranges, then record what would change your mind. That note keeps debates honest.
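Frequency framing and the "small risk, many people" arithmetic can be made concrete. The helper names and the policy numbers below are hypothetical: a policy that cuts a 3-in-10,000 risk to 2-in-10,000 for 5 million people, and a choice that adds a 10 percent risk for a group of 2,000.

```python
def as_frequency(p: float, denominator: int = 100) -> str:
    """Express a probability as 'k in N', which most people grasp faster."""
    return f"{round(p * denominator):,} in {denominator:,}"


def expected_events(risk_per_person: float, people: int) -> float:
    """Expected number of events: per-person risk multiplied by people exposed."""
    return risk_per_person * people


print(as_frequency(0.02))  # 2 in 100

# Hypothetical policy: cuts a 3-in-10,000 risk to 2-in-10,000 for 5 million people.
avoided = expected_events(3 / 10_000, 5_000_000) - expected_events(2 / 10_000, 5_000_000)
print(round(avoided))  # 500

# Hypothetical choice: adds a 10 percent risk for a group of 2,000 people.
print(round(expected_events(0.10, 2_000)))  # 200
```

The totals are comparable, but they fall on very different groups, which is exactly the distribution question the paragraph asks readers to weigh.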

A citizen checklist for probability claims

- Identify the outcome and time frame.

- Note the baseline rate before new information.

- Ask for the range, not only the point.

- Check the sample, method, and possible biases.

- Convert to frequencies where possible.

- Consider impact, not only chance.

- Update beliefs when credible evidence arrives.

- Prefer verifiable processes over opaque ones.

Simple classroom and newsroom activities

Run a coin-flip tournament to show streaks and regression to the mean. Draw random samples from a jar and chart how the estimates stabilize as sample sizes grow. Build a small turnout simulation and show how uncertainty widens when inputs are noisy. Publish the seed and steps so anyone can replicate the results, and invite readers to rerun them on their own devices. This habit not only teaches probability literacy but also models the transparency citizens should expect from public institutions.
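Two of these activities can be scripted so that a published seed makes every run replicable. In this sketch the seed value, the jar's contents (300 red and 700 blue marbles), and the sample sizes are hypothetical; streaks in 100 fair flips are often longer than people expect, and the jar estimates should drift toward 30 percent as samples grow.

```python
import random

SEED = 20240601  # hypothetical seed; publish it so anyone can rerun and get identical results


def longest_streak(flips: list[str]) -> int:
    """Length of the longest run of identical outcomes."""
    if not flips:
        return 0
    best = current = 1
    for previous, nxt in zip(flips, flips[1:]):
        current = current + 1 if nxt == previous else 1
        best = max(best, current)
    return best


def jar_estimates(red: int, blue: int, sample_sizes: list[int],
                  rng: random.Random) -> dict[int, float]:
    """Estimate the share of red marbles from samples drawn without replacement."""
    jar = ["red"] * red + ["blue"] * blue
    return {n: sum(m == "red" for m in rng.sample(jar, n)) / n for n in sample_sizes}


rng = random.Random(SEED)
flips = [rng.choice("HT") for _ in range(100)]
print("longest streak in 100 flips:", longest_streak(flips))
print(jar_estimates(red=300, blue=700, sample_sizes=[10, 50, 200, 800], rng=rng))
```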

Probability literacy is not abstract theory. It is a civic skill you can practice every week. Treat numbers as evidence with uncertainty, not proofs. Ask for methods, verify where possible, and keep a steady process for updating what you think is true.